Analytics Development Accelerator (ADA)

ADA is a cloud-native software-as-a-service (SaaS) product that automates the majority of tasks related to the implementation, operation, and maintenance of modern data warehouse and data platform environments. ADA targets Azure Synapse Analytics and Azure Data Factory V2 and produces native implementations for those targets. Its main features are metadata-driven development, version control, integrated deployment, support for external workloads, workflow orchestration, and a data editor for analytical master data management.

Data Flow

- Deploy into Azure Synapse Analytics, Azure Data Factory V2, or Azure Databricks with zero lock-in

- Deployment and version control across multiple environments

- Document data flows using purpose-built template dialogs and deploy them as actual code into the target system

- Automated workflow generation

- Speed up data warehouse implementations by 70% or more

- Improve quality by generating the implementation from metadata

- Regenerate implementations when best practices or the platform change

- Standardized implementations for easier application management services
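The idea of generating an implementation from metadata can be illustrated with a minimal sketch. The `DataFlowDefinition` and `ColumnMapping` classes, the table names, and the generated SQL shape are all hypothetical and chosen only for illustration; they are not ADA's actual metadata format or output.

```python
from dataclasses import dataclass, field


@dataclass
class ColumnMapping:
    """One target column and the source expression that fills it."""
    target: str
    expression: str


@dataclass
class DataFlowDefinition:
    """Hypothetical metadata for one data flow (not ADA's real schema)."""
    source_table: str
    target_table: str
    columns: list[ColumnMapping] = field(default_factory=list)


def generate_insert_sql(flow: DataFlowDefinition) -> str:
    """Render the metadata as a plain INSERT ... SELECT statement."""
    if not flow.columns:
        raise ValueError("a data flow needs at least one column mapping")
    target_cols = ", ".join(c.target for c in flow.columns)
    select_exprs = ",\n    ".join(
        f"{c.expression} AS {c.target}" for c in flow.columns
    )
    return (
        f"INSERT INTO {flow.target_table} ({target_cols})\n"
        f"SELECT\n    {select_exprs}\n"
        f"FROM {flow.source_table};"
    )


# Example definition with made-up table and column names.
flow = DataFlowDefinition(
    source_table="staging.customer",
    target_table="dw.dim_customer",
    columns=[
        ColumnMapping("customer_id", "id"),
        ColumnMapping("full_name", "CONCAT(first_name, ' ', last_name)"),
    ],
)
sql = generate_insert_sql(flow)
print(sql)
```

Because the SQL is derived entirely from the definition, changing the template in `generate_insert_sql` and rerunning it regenerates every data flow in the new style, which is the property the list above refers to as regenerable and standardized implementations.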

Work Flow

- Execute common cloud and on-premises analytical workloads such as pipelines, suspend, resume, scale, process, …

- Deployment and version control across multiple environments

- Monitor and recover loads

- Publish data lineage to Purview (automated and scheduled publishing of data lineage produced with ADA)

- Note that the work flow is controlled on the ADA side and can therefore be considered lock-in
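As a rough illustration of orchestrating, monitoring, and recovering loads, the sketch below runs steps in order and retries a failed step before giving up. The function `run_work_flow`, the step names, and the retry policy are assumptions made for this example and do not describe how ADA implements its Work Flow module.

```python
def run_work_flow(steps, max_attempts=2):
    """Run steps in order; retry a failed step, stop if it keeps failing.

    `steps` is a list of (name, callable) pairs. Returns a log of
    (step name, attempt number, status) tuples that can be monitored.
    """
    log = []
    for name, action in steps:
        for attempt in range(1, max_attempts + 1):
            try:
                action()
                log.append((name, attempt, "succeeded"))
                break
            except Exception:
                log.append((name, attempt, "failed"))
        else:
            # Every attempt failed: stop so later steps do not run.
            break
    return log


# Stand-in steps; a real work flow would call the target platform here.
calls = {"pipeline": 0}


def resume_capacity():
    pass


def run_pipeline():
    calls["pipeline"] += 1
    if calls["pipeline"] == 1:
        raise RuntimeError("transient failure on first attempt")


def suspend_capacity():
    pass


log = run_work_flow([
    ("resume", resume_capacity),
    ("pipeline", run_pipeline),
    ("suspend", suspend_capacity),
])
for entry in log:
    print(entry)
```

In this run the pipeline step fails once, is retried, and succeeds, so the suspend step still executes afterwards.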

Data Editor

- Modify live data directly in the database

- Commonly used for configuration and analytical master data management

- Implement business rules with post-execute SQL
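One way to picture a post-execute SQL rule is a statement that runs right after an edit is saved, in the same transaction. The sketch below uses Python's built-in `sqlite3` module as a stand-in database; the `product_group` table, the `save_edit` helper, and the rule itself are hypothetical and are not taken from ADA.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE product_group ("
    "code TEXT PRIMARY KEY, name TEXT, is_valid INTEGER)"
)
conn.execute("INSERT INTO product_group VALUES ('A1', 'Bikes', 1)")


def save_edit(conn, code, new_name, post_execute_sql):
    """Apply one edit, then run the rule SQL in the same transaction."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "UPDATE product_group SET name = ? WHERE code = ?",
            (new_name, code),
        )
        conn.execute(post_execute_sql)


# Hypothetical business rule: rows with a blank name are flagged invalid.
RULE = (
    "UPDATE product_group "
    "SET is_valid = CASE WHEN TRIM(name) = '' THEN 0 ELSE 1 END"
)

save_edit(conn, "A1", "  ", RULE)
row = conn.execute(
    "SELECT name, is_valid FROM product_group WHERE code = 'A1'"
).fetchone()
print(row)
```

Running the rule inside the same transaction as the edit means a failing rule also undoes the edit, which is one reasonable design choice for live master data; whether ADA behaves this way is not stated here.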

Operating Environment

ADA itself is also an Azure software-as-a-service (SaaS) offering, so no installation is required. The end results are still deployed to your own Azure subscription.

Modern Data Warehouse with Microsoft Fabric

- Suspend, resume, and scale Microsoft Fabric capacity with the Work Flow module

- Orchestrate and control data pipelines and notebooks with the Work Flow module

- Define and generate data flows with the Data Flow module using an easy-to-use user interface

- Access and modify live data from SQL endpoints using the Data Editor module

- Control all your data platform environments. Deploy and monitor with ease using ADA functionalities

Note that the idea of ADA is to automate tasks that a developer would otherwise do manually or with another automation tool such as Apache Airflow and/or dbt. Using the Data Flow module to define and generate data flows is entirely optional. It is common to create the data flows for the bronze and silver layers manually and to use ADA to generate the gold layer data flows, which usually require complex SQL code. Using the other ADA modules is naturally also optional.

Modern Data Warehouse with Azure Synapse Analytics

- Define and generate data flows with the Data Flow module using an easy-to-use user interface

- Use parameterizable ADA templates to work with Azure Databricks. Implement archiving, change data capture (CDC), and data delivery on top of Azure Data Lake Storage with the provided Azure Databricks notebook templates

- Orchestrate and control data platform loads with the Work Flow module, and use the Data Editor module to modify live data, e.g. analytical master data or configuration data

- Document data lineage in Azure Purview with the Work Flow module. Use repositories with Data Flow module deployments to safeguard the implementation and track changes

- Control all your data platform environments. Deploy and monitor with ease using ADA functionalities

Modern Data Warehouse with Azure Databricks (Unity Catalog)

- Define and generate data flows with the Data Flow module using an easy-to-use user interface

- Use parameterizable ADA templates to work with Azure Databricks. Implement archiving, change data capture (CDC), and data delivery on top of Azure Data Lake Storage with the provided Azure Databricks notebook templates

- Orchestrate and control data platform loads with the Work Flow module, and use the Data Editor module to modify live data, e.g. analytical master data or configuration data

- Document data lineage automatically with Azure Databricks data lineage. Use repositories with Data Flow module deployments to safeguard the implementation and track changes

- Control all your data platform environments. Deploy and monitor with ease using ADA functionalities

Modern Data Warehouse with Azure PostgreSQL

- Define and generate data flows with the Data Flow module using an easy-to-use user interface

- Use parameterizable ADA templates to work with Azure Databricks. Implement archiving, change data capture (CDC), and data delivery on top of Azure Data Lake Storage with the provided Azure Databricks notebook templates

- Orchestrate and control data platform loads with the Work Flow module, and use the Data Editor module to modify live data, e.g. analytical master data or configuration data

- Document data lineage in Azure Purview with the Work Flow module. Use repositories with Data Flow module deployments to safeguard the implementation and track changes

- Control all your data platform environments. Deploy and monitor with ease using ADA functionalities

Modern Data Warehouse with Snowflake

- Define and generate data flows with the Data Flow module using an easy-to-use user interface

- Use parameterizable ADA templates to work with Azure Databricks. Implement archiving, change data capture (CDC), and data delivery on top of Azure Data Lake Storage with the provided Azure Databricks notebook templates

- Orchestrate and control data platform loads with the Work Flow module, and use the Data Editor module to modify live data, e.g. analytical master data or configuration data

- Document data lineage in Azure Purview with the Work Flow module. Use repositories with Data Flow module deployments to safeguard the implementation and track changes

- Control all your data platform environments. Deploy and monitor with ease using ADA functionalities

Small Modern Data Warehouse

- Define and generate data flows with the Data Flow module using an easy-to-use user interface

- Use parameterizable ADA templates to work with Azure Databricks. Implement archiving, change data capture (CDC), and data delivery on top of Azure Data Lake Storage with the provided Azure Databricks notebook templates

- Orchestrate and control data platform loads with the Work Flow module, and use the Data Editor module to modify live data, e.g. analytical master data or configuration data

- Document data lineage in Azure Purview with the Work Flow module. Use repositories with Data Flow module deployments to safeguard the implementation and track changes

- Control all your data platform environments. Deploy and monitor with ease using ADA functionalities

User Interface

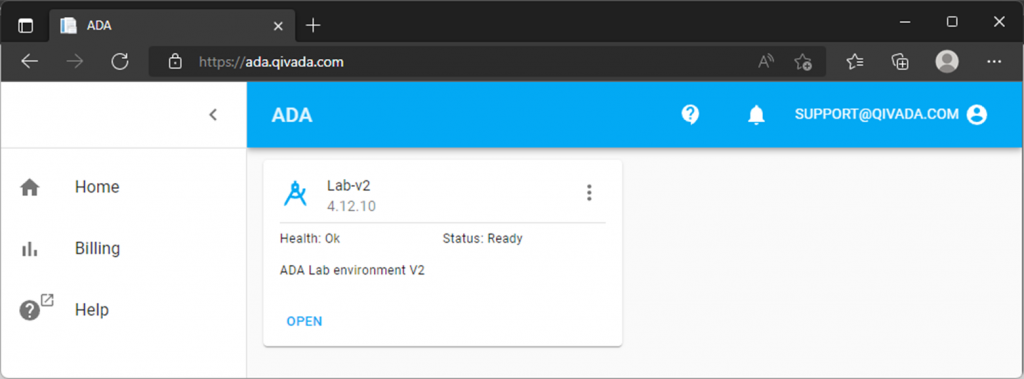

Landing Page

Access and manage ADA through a common landing page.

Data Flow

Manage data flow definitions in a structure of your own choice.

Build and manage data flow definitions with easy-to-use dialogs.

Based on the data flow definition, the data flow functionality is deployed into the customer’s own Azure environment.

Data Editor

Manage data editor definitions in a structure of your own choice.

Access and modify data with easy-to-use dialogs.

Work Flow

Manage work flow definitions in a structure of your own choice.

Build and manage work flow definitions with easy-to-use dialogs.

Monitor work flow schedules across all environments.

Storage

Define database and file connections to be used with Data Flow and Data Editor.

Define a custom SQL query to be used as the source for Data Flow or Data Editor. Base tables can also be used.

Compare differences between databases.
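What a database comparison might report can be sketched as follows, assuming the comparison is made at the level of table and column definitions. The text above does not say what ADA actually compares, so the functions `table_columns` and `compare_schemas` and the report format are hypothetical, and `sqlite3` is used only as a convenient stand-in database.

```python
import sqlite3


def table_columns(conn):
    """Map each table name to its set of (column name, declared type)."""
    tables = [
        r[0]
        for r in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table'"
        )
    ]
    return {
        t: {(c[1], c[2]) for c in conn.execute(f"PRAGMA table_info('{t}')")}
        for t in tables
    }


def compare_schemas(left, right):
    """Report tables missing on either side and tables whose columns differ."""
    a, b = table_columns(left), table_columns(right)
    return {
        "only_in_left": sorted(a.keys() - b.keys()),
        "only_in_right": sorted(b.keys() - a.keys()),
        "changed": sorted(t for t in a.keys() & b.keys() if a[t] != b[t]),
    }


# Two in-memory databases standing in for, say, development and production.
dev = sqlite3.connect(":memory:")
dev.execute("CREATE TABLE customer (id INTEGER, name TEXT, email TEXT)")
dev.execute("CREATE TABLE orders (id INTEGER)")

prod = sqlite3.connect(":memory:")
prod.execute("CREATE TABLE customer (id INTEGER, name TEXT)")

diff = compare_schemas(dev, prod)
print(diff)
```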